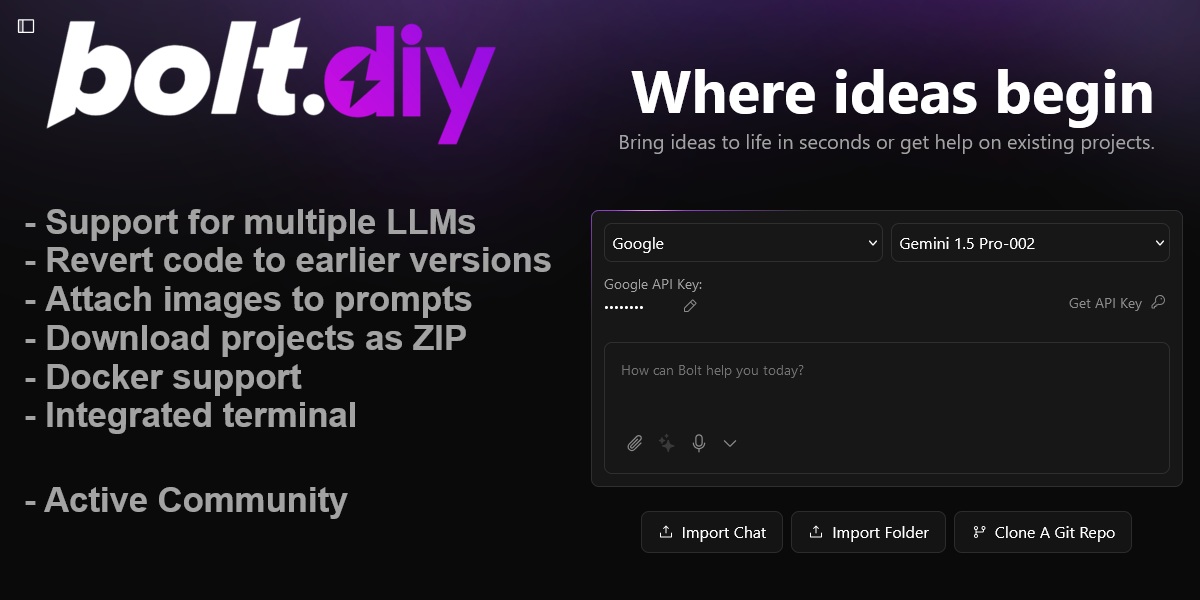

Bolt.diy: The Open Source Version 🚀

Last updated 12/11/24. For the latest news, visit the GitHub repo.

Supported LLM Providers

Currently supported providers include:

- OpenAI

- Anthropic

- Ollama

- OpenRouter

- Gemini

- LM Studio

- Mistral

- xAI

- HuggingFace

- DeepSeek

- Groq

Bolt.diy can also be extended to support any model compatible with the Vercel AI SDK!
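To illustrate what "extendable" can mean in practice, here is a minimal TypeScript sketch of a provider registry: each provider advertises its models and a factory for a model client, and the rest of the app only talks to the registry. The names `ModelClient`, `ProviderInfo`, `ProviderRegistry`, and the `Echo` provider are hypothetical and are not Bolt.diy's actual internals; in a real integration, `getModel` would return a Vercel AI SDK model instance instead of the string-returning stub used here.

```typescript
// Hypothetical sketch: these names are illustrative, NOT Bolt.diy's real provider API.

// Stand-in for a chat model client. In practice this would wrap a
// Vercel AI SDK language model; here it just returns a string.
interface ModelClient {
  modelId: string;
  generate(prompt: string): string;
}

// What each provider advertises to the rest of the app.
interface ProviderInfo {
  name: string;
  staticModels: string[];
  getModel(modelId: string, apiKey?: string): ModelClient;
}

class ProviderRegistry {
  private providers = new Map<string, ProviderInfo>();

  register(provider: ProviderInfo): void {
    if (this.providers.has(provider.name)) {
      throw new Error(`Provider "${provider.name}" is already registered`);
    }
    this.providers.set(provider.name, provider);
  }

  // Flatten every provider's models into "provider/model" ids for a model picker.
  listModels(): string[] {
    const ids: string[] = [];
    this.providers.forEach((p) => {
      p.staticModels.forEach((m) => ids.push(`${p.name}/${m}`));
    });
    return ids;
  }

  getModel(providerName: string, modelId: string, apiKey?: string): ModelClient {
    const provider = this.providers.get(providerName);
    if (!provider) throw new Error(`Unknown provider "${providerName}"`);
    if (provider.staticModels.indexOf(modelId) === -1) {
      throw new Error(`Model "${modelId}" is not offered by "${providerName}"`);
    }
    return provider.getModel(modelId, apiKey);
  }
}

// Hypothetical provider that echoes the prompt instead of calling a real API.
const echoProvider: ProviderInfo = {
  name: "Echo",
  staticModels: ["echo-small", "echo-large"],
  getModel: (modelId) => ({
    modelId,
    generate: (prompt) => `[${modelId}] ${prompt}`,
  }),
};

const registry = new ProviderRegistry();
registry.register(echoProvider);
```

Under this design, supporting one more provider is a single extra `registry.register(...)` call; the code that builds the model picker never needs to change.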

Community & Support

- Documentation: Check out the Bolt.diy Docs

- Community: Join the discussion at Think Tank

- GitHub: Access the code at stackblitz-labs/bolt.diy

Recent Community Achievements ✅

AI Integration

- OpenRouter Integration

- Gemini Integration

- DeepSeek API Integration

- Mistral API Integration

- xAI Grok Beta Integration

- LM Studio Integration

- HuggingFace Integration

- Cohere Integration

- Together Integration

Development Features

- Autogenerate Ollama models

- Filter models by provider

- Download project as ZIP

- One-way file sync to local folder

- Docker containerization

- GitHub project publishing

- UI-based API key management

- Bolt terminal for LLM output

- Code output streaming

- Code version control

- Dynamic model token length

- Prompt caching and enhancement

- Local project import

- Mobile-friendly interface

- Image attachment for prompts

- Auto package detection and installation
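Two of the features above, autogenerating Ollama models and filtering models by provider, can be sketched together. A local Ollama server lists its installed models at `GET /api/tags` (by default on `http://localhost:11434`), and the response carries a `models` array whose entries include a `name` and a `details` object. The sketch below assumes that response has already been fetched and only types the fields it uses; `ModelInfo`, `modelsFromOllamaTags`, and `filterByProvider` are hypothetical helpers, and the sample data is made up for illustration.

```typescript
// Hypothetical sketch: turning Ollama's installed-model list into picker entries.
// Only the fields used here are typed; the real /api/tags response has more.
interface OllamaTagsResponse {
  models: { name: string; details?: { parameter_size?: string } }[];
}

// Hypothetical shape for an entry in the model picker.
interface ModelInfo {
  provider: string;
  name: string;  // id sent to the provider
  label: string; // human-readable text for the UI
}

// "Autogenerate Ollama models": one entry per locally installed model.
function modelsFromOllamaTags(tags: OllamaTagsResponse): ModelInfo[] {
  return tags.models.map((m) => {
    const params = m.details?.parameter_size;
    return {
      provider: "Ollama",
      name: m.name,
      label: params ? `${m.name} (${params})` : m.name,
    };
  });
}

// "Filter models by provider": keep only the entries for the chosen provider.
function filterByProvider(models: ModelInfo[], provider: string): ModelInfo[] {
  return models.filter((m) => m.provider === provider);
}

// In an app, `tags` might come from something like:
//   const tags = await (await fetch("http://localhost:11434/api/tags")).json();
// Made-up sample data standing in for that response:
const sampleTags: OllamaTagsResponse = {
  models: [
    { name: "llama3:latest", details: { parameter_size: "8.0B" } },
    { name: "qwen2.5-coder:7b" },
  ],
};

const allModels: ModelInfo[] = [
  ...modelsFromOllamaTags(sampleTags),
  { provider: "OpenAI", name: "gpt-4o", label: "GPT-4o" },
];
```

Generating the list at runtime means a newly pulled Ollama model shows up without any code change, while statically configured providers can sit in the same list.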

High Priority Roadmap ⚡

Performance Optimization

- Prevent frequent file rewrites using file locking and diffs

- Optimize prompting for smaller LLMs

- Run agents in the backend instead of relying on single model calls
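The first roadmap item is not implemented yet, so the following is only one possible reading of it, sketched in TypeScript: a file store that refuses model-proposed writes to files the user has locked and skips writes whose content is identical to what is already there. `GuardedFileStore` and `WriteResult` are hypothetical names, and the store is an in-memory map rather than a real filesystem. A fuller version would also compute a proper diff (for example with the Myers algorithm) so only changed hunks are rewritten; that part is not shown.

```typescript
// Hypothetical sketch of the roadmap idea; not existing Bolt.diy code.
type WriteResult = "written" | "unchanged" | "locked";

class GuardedFileStore {
  private files = new Map<string, string>();
  private lockedPaths = new Set<string>();

  // Mark a file as off-limits to model-generated writes.
  lock(path: string): void {
    this.lockedPaths.add(path);
  }

  unlock(path: string): void {
    this.lockedPaths.delete(path);
  }

  read(path: string): string | undefined {
    return this.files.get(path);
  }

  // Apply a model-proposed write only if the file is unlocked
  // and the new content actually differs from the current content.
  write(path: string, content: string): WriteResult {
    if (this.lockedPaths.has(path)) return "locked";
    if (this.files.get(path) === content) return "unchanged";
    this.files.set(path, content);
    return "written";
  }
}
```

Returning a status instead of silently ignoring a write lets the UI tell the user why a suggested change was not applied.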

Deployment & Integration

- Direct deployment to Vercel/Netlify

- VS Code Integration with Git-like confirmations

- Azure OpenAI API Integration

- Perplexity Integration

- Vertex AI Integration

Enhanced Features

- Project planning in MD files

- Document upload for knowledge base

- Voice prompting

What Makes Bolt Different?

Full-Stack Browser Development

- Install and run npm packages

- Execute Node.js servers

- Interact with third-party APIs

- Deploy to production from chat

- Share via URL

Complete Environment Control

Unlike traditional AI coding assistants, Bolt.diy gives AI models control over:

- Filesystem

- Node server

- Package manager

- Terminal

- Browser console

This enables AI agents to manage the entire application lifecycle from creation to deployment.
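One way to picture how a model "controls" the filesystem and terminal is to have it emit structured actions in its reply, which the app parses and applies. The TypeScript sketch below assumes a hypothetical `<action type="file" path="...">` / `<action type="shell">` tag format (an illustration, not necessarily the format Bolt.diy's prompts use), parses those tags out of the model's text, and applies them to an in-memory file map and a shell-command log standing in for the real filesystem and terminal. `Action`, `parseActions`, and `applyActions` are hypothetical names.

```typescript
// Hypothetical sketch: the <action> tag format is an assumption for illustration.
type Action =
  | { type: "file"; path: string; content: string }
  | { type: "shell"; command: string };

// Extract every <action ...>...</action> block from the model's reply.
function parseActions(llmOutput: string): Action[] {
  // Fresh regex per call so the global flag's lastIndex state never leaks.
  const re = /<action\s+type="(file|shell)"(?:\s+path="([^"]*)")?\s*>([\s\S]*?)<\/action>/g;
  const actions: Action[] = [];
  let match: RegExpExecArray | null;
  while ((match = re.exec(llmOutput)) !== null) {
    const [, type, path, body] = match;
    if (type === "file") {
      if (!path) continue; // a file action without a path cannot be applied
      actions.push({ type: "file", path, content: body.trim() });
    } else {
      actions.push({ type: "shell", command: body.trim() });
    }
  }
  return actions;
}

// Apply parsed actions to stand-ins for the filesystem and the terminal.
function applyActions(actions: Action[], files: Map<string, string>, shellLog: string[]): void {
  for (const action of actions) {
    if (action.type === "file") {
      files.set(action.path, action.content);
    } else {
      // A real app would run this in its terminal; here we only record it.
      shellLog.push(action.command);
    }
  }
}

const sampleReply = [
  "Here is a minimal Node.js app.",
  '<action type="file" path="index.js">console.log("hello");</action>',
  '<action type="shell">node index.js</action>',
].join("\n");
```

Keeping parsing separate from applying makes it straightforward to show the user the proposed actions, or filter them, before anything touches the environment.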

Getting Started

Visit the official repository for detailed setup instructions and contribution guidelines.

Contributing

The project welcomes contributions! Whether you’re fixing bugs, adding features, or improving documentation, check out the contribution guidelines to get started.